Empowering privacy-preserving LLM training with secure multi-party computation.

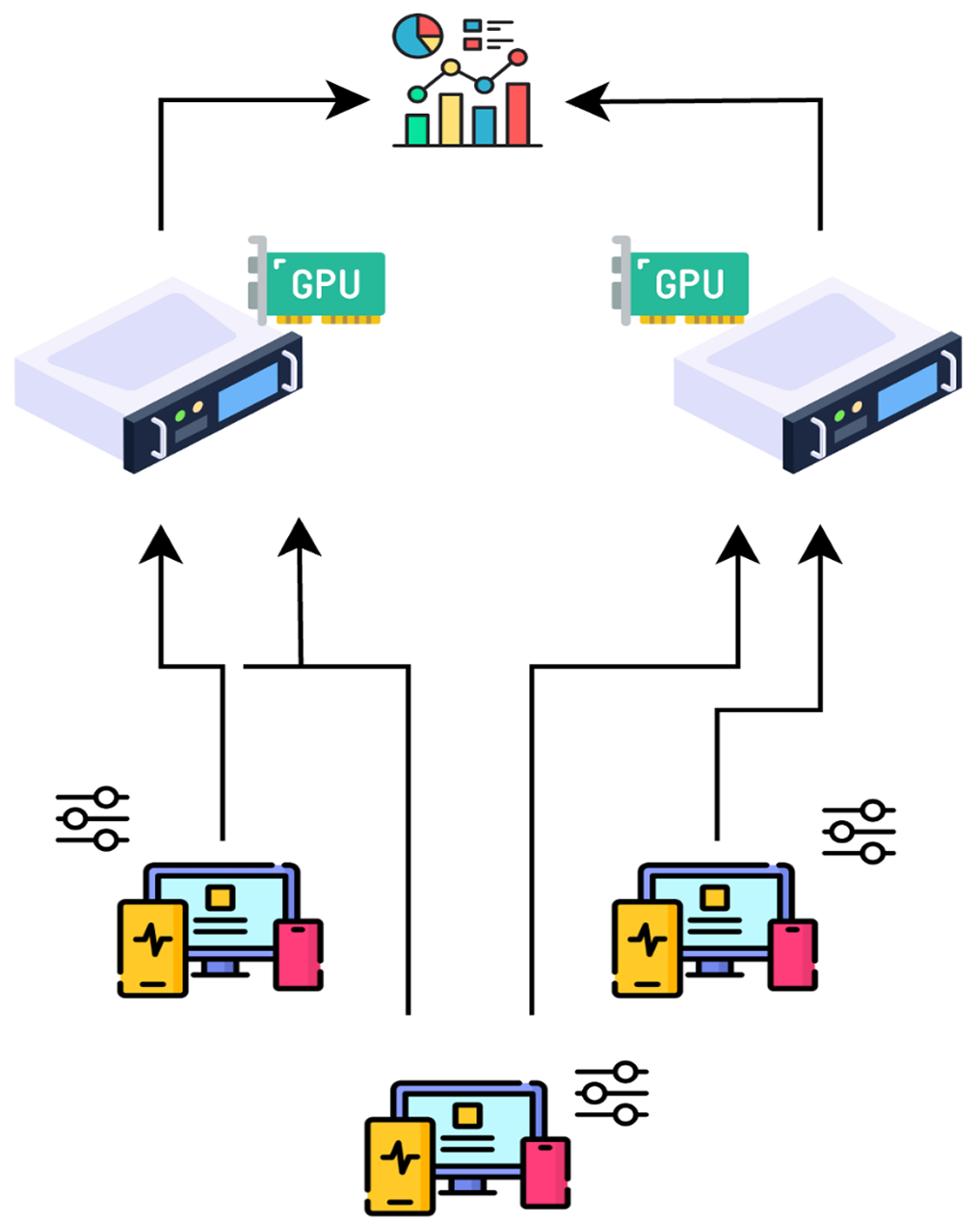

Leverages GPU acceleration for fast training

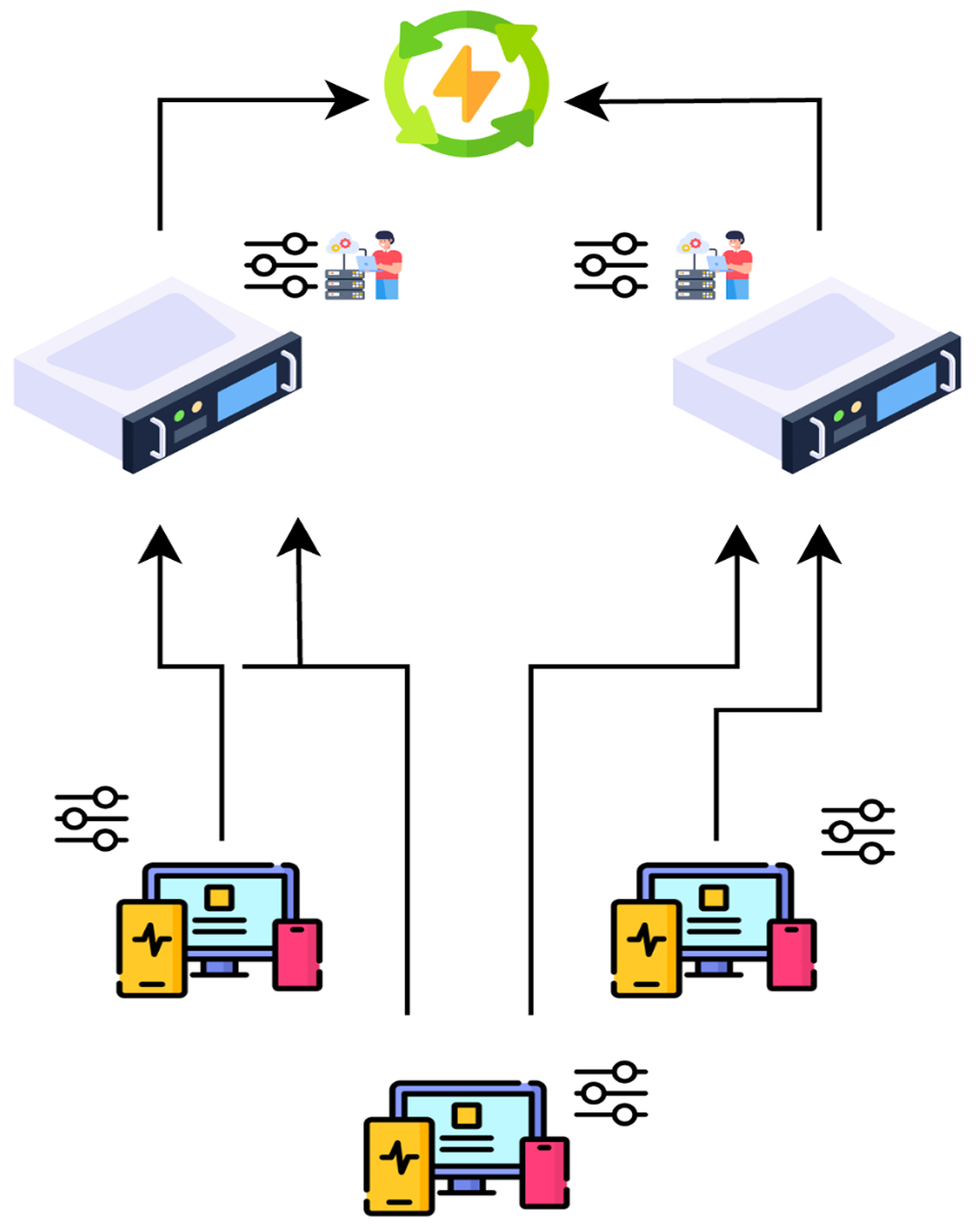

Users can easily configure parameters to optimize performance and security

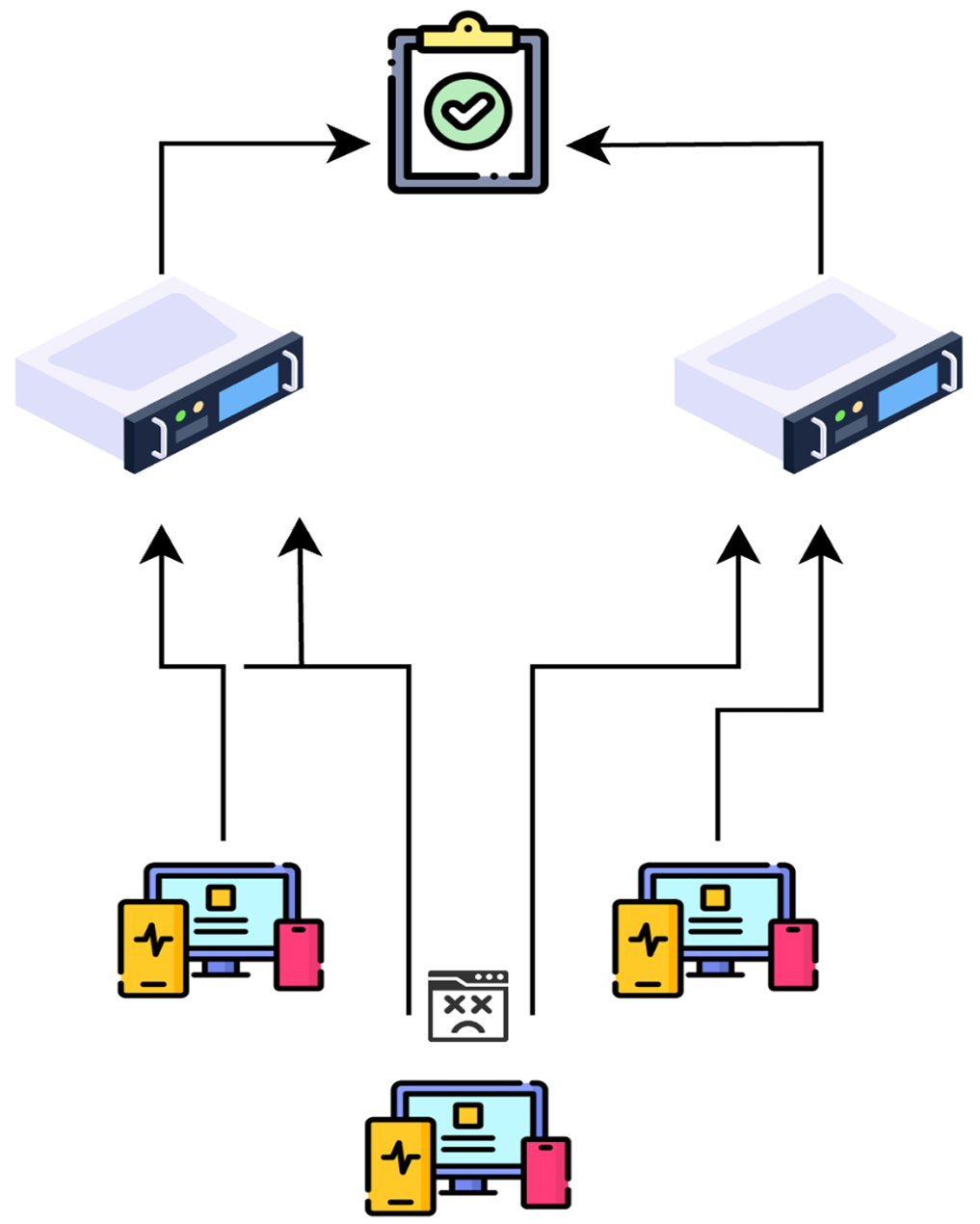

Ensures correct aggregation in the event of dropouts

Enables the communication of models exceeding the 2 GB limit set by gRPC
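One common way to move a payload larger than a single-message limit is to split it into chunks, stream them, and reassemble on the other side. The sketch below illustrates that general idea only; it is not the product's implementation. The names `split_into_chunks`, `reassemble`, and `CHUNK_SIZE` are hypothetical, and the transport itself (for example a gRPC stream) is left out.

```python
from typing import Iterable, Iterator

# Hypothetical chunk size, chosen to stay far below a 2 GB message limit.
CHUNK_SIZE = 64 * 1024 * 1024


def split_into_chunks(payload: bytes, chunk_size: int = CHUNK_SIZE) -> Iterator[bytes]:
    """Yield consecutive slices of the serialized model, each at most chunk_size bytes."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    view = memoryview(payload)  # avoids copying the whole payload up front
    for start in range(0, len(view), chunk_size):
        yield bytes(view[start:start + chunk_size])


def reassemble(chunks: Iterable[bytes]) -> bytes:
    """Concatenate chunks, in the order received, back into the original payload."""
    return b"".join(chunks)
```

In a streaming setup, each yielded chunk would be sent as its own message, so no single message approaches the limit.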

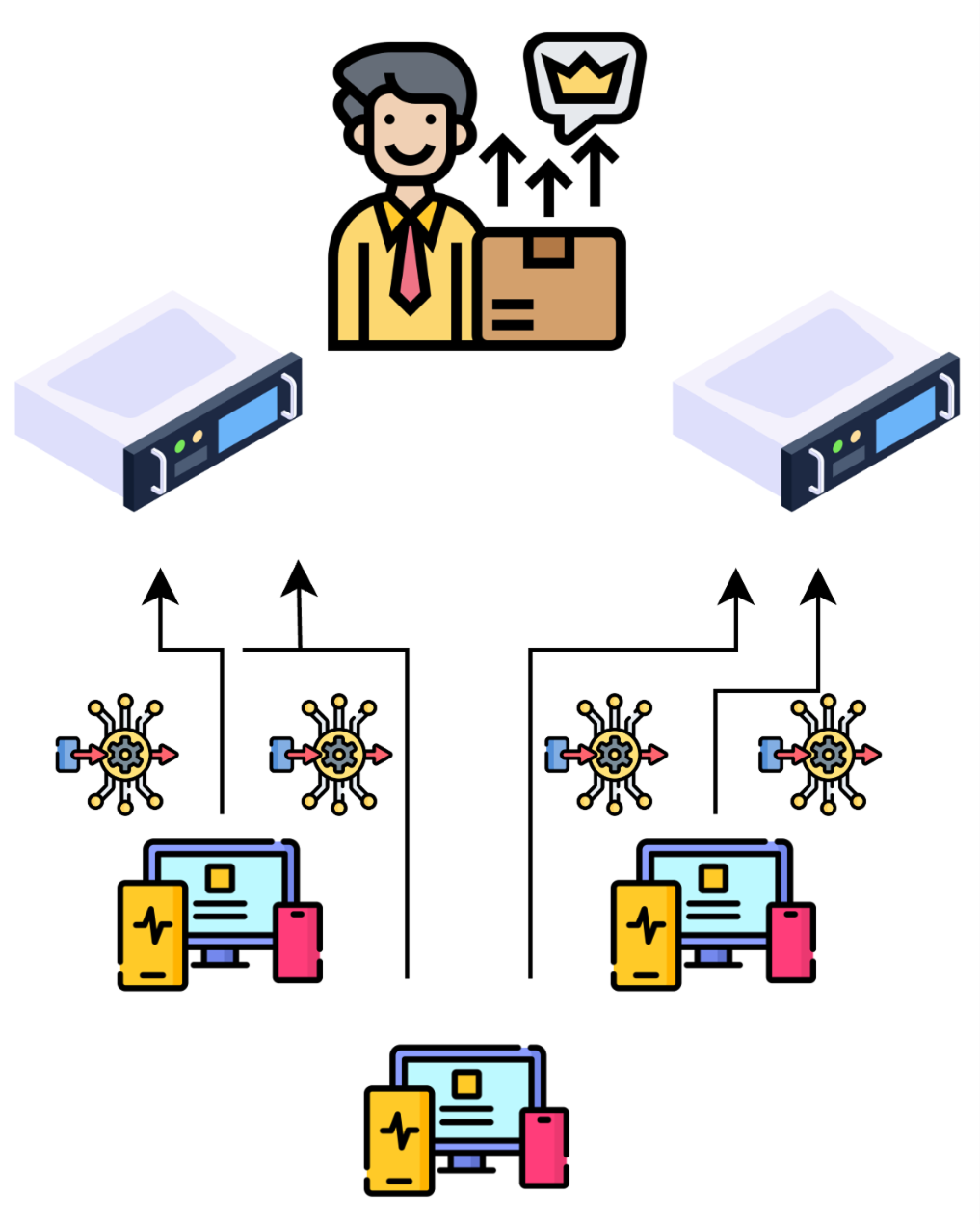

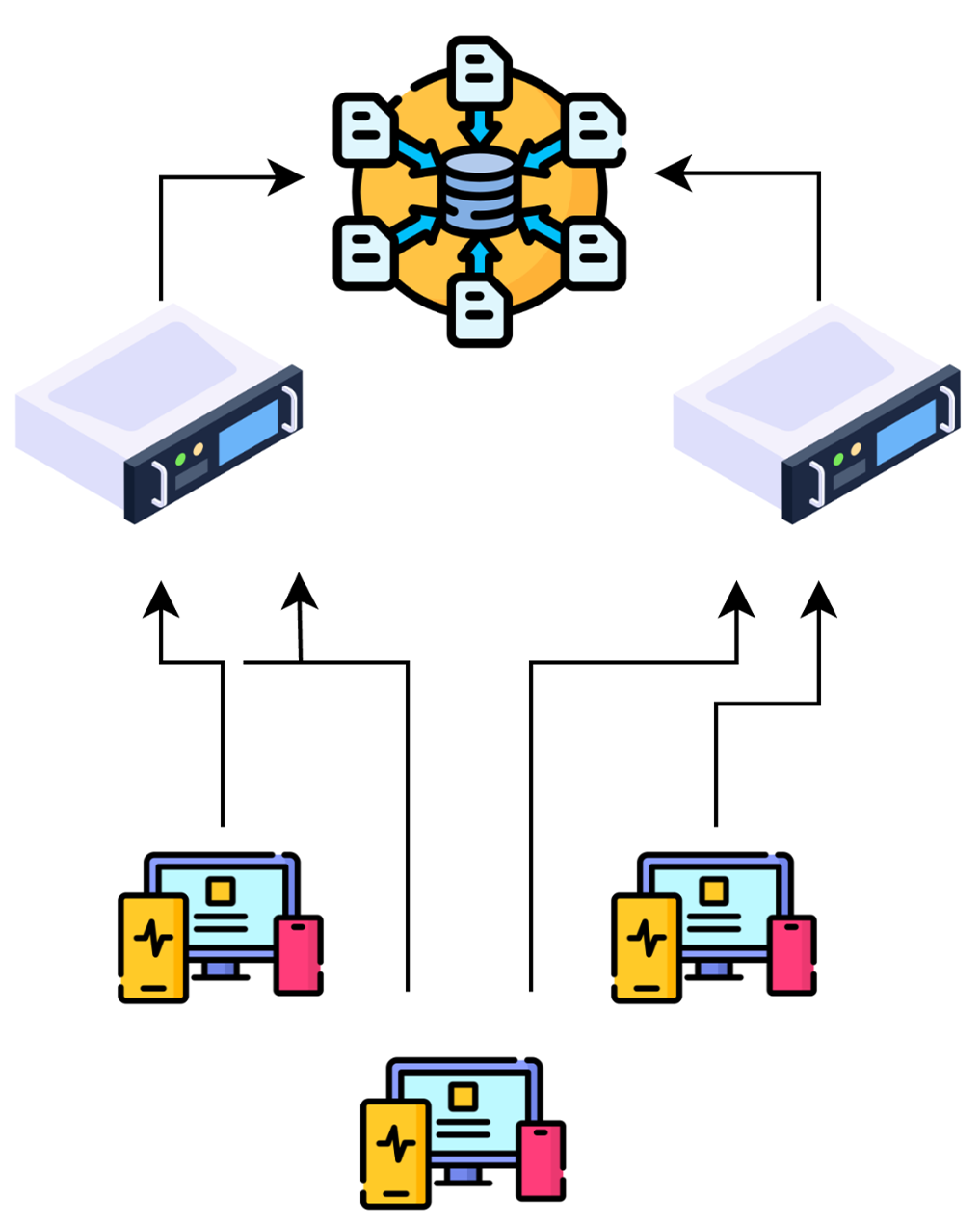

Employs secure multi-party computation techniques for secure aggregation

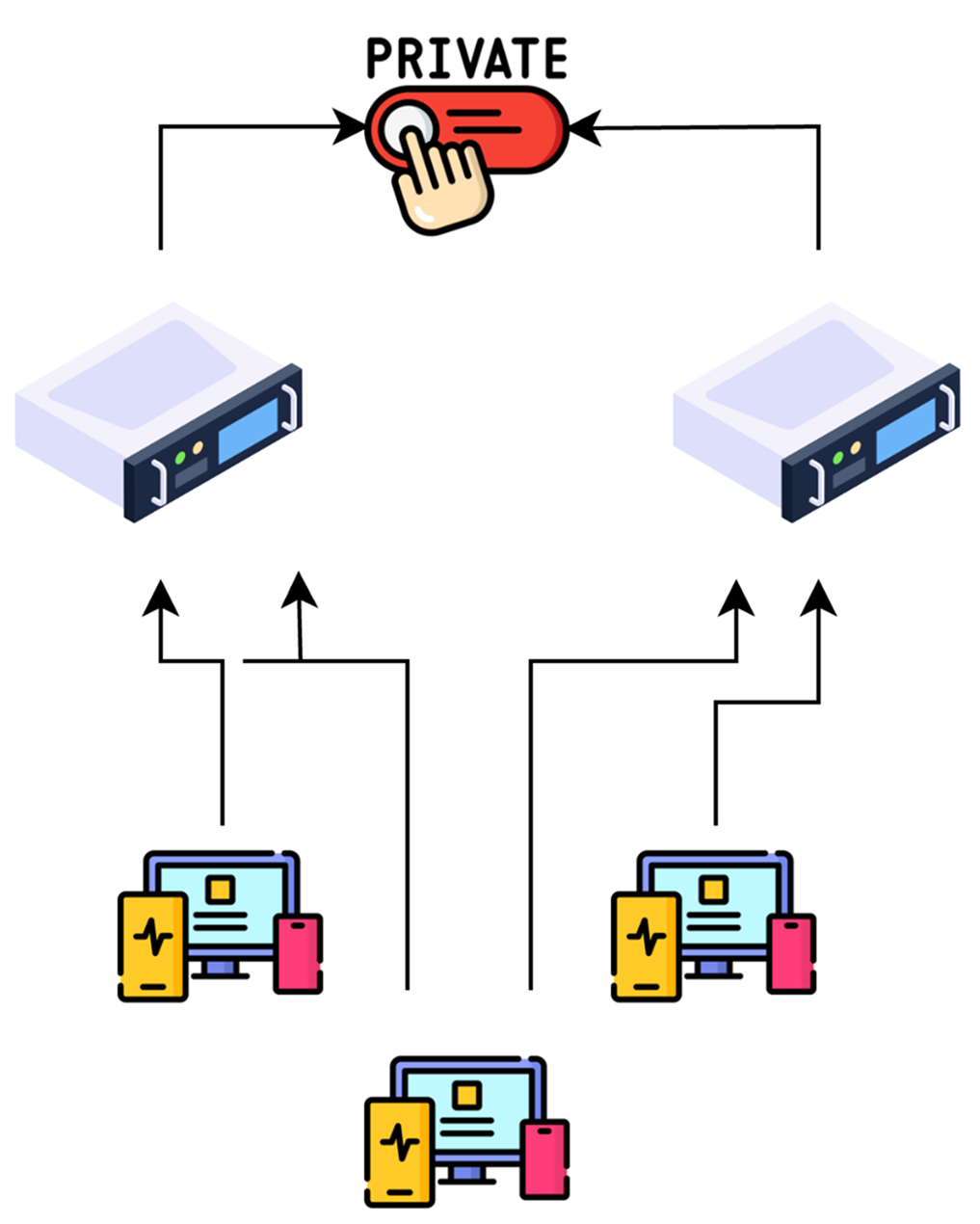

Multiple servers aggregate collectively instead of relying on a single central aggregator
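To give a feel for how several servers can aggregate without any one of them seeing an individual update, here is a minimal sketch of additive secret sharing, one standard multi-party computation building block. It is an illustration under stated assumptions, not the product's actual protocol: updates are assumed to be already quantized to non-negative integers, `MODULUS` and all function names are hypothetical, and dropouts are handled simply by having every server sum over the same agreed list of surviving clients.

```python
import secrets
from typing import Dict, List

# Hypothetical modulus; all arithmetic on shares is done modulo this value.
MODULUS = 2**61 - 1


def share(value: int, n_servers: int) -> List[int]:
    """Split one integer into n_servers random shares that sum to it modulo MODULUS."""
    if n_servers < 2:
        raise ValueError("need at least two servers")
    shares = [secrets.randbelow(MODULUS) for _ in range(n_servers - 1)]
    shares.append((value - sum(shares)) % MODULUS)
    return shares


def share_vector(update: List[int], n_servers: int) -> List[List[int]]:
    """Return one share vector per server for a client's quantized update."""
    per_value = [share(v, n_servers) for v in update]
    return [[per_value[i][s] for i in range(len(update))] for s in range(n_servers)]


def server_partial_sum(inbox: Dict[str, List[int]], survivors: List[str]) -> List[int]:
    """One server adds the shares it holds, using only the agreed surviving clients."""
    length = len(inbox[survivors[0]])
    return [sum(inbox[c][i] for c in survivors) % MODULUS for i in range(length)]


def combine(partials: List[List[int]]) -> List[int]:
    """Add the servers' partial sums to reveal only the aggregate."""
    return [sum(column) % MODULUS for column in zip(*partials)]
```

Each server sees only uniformly random-looking shares; the aggregate appears only once all partial sums are combined. If a client drops out, the servers leave its shares out of the sum, so the result is still the exact total over the remaining clients.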

In 2023, genetic testing giant 23andMe suffered a massive breach,...

May 27, 2025

Business leaders are wary. They urgently want to reap the...

May 27, 2025

Join us on our journey to make federated approaches available to everyone.